Overview

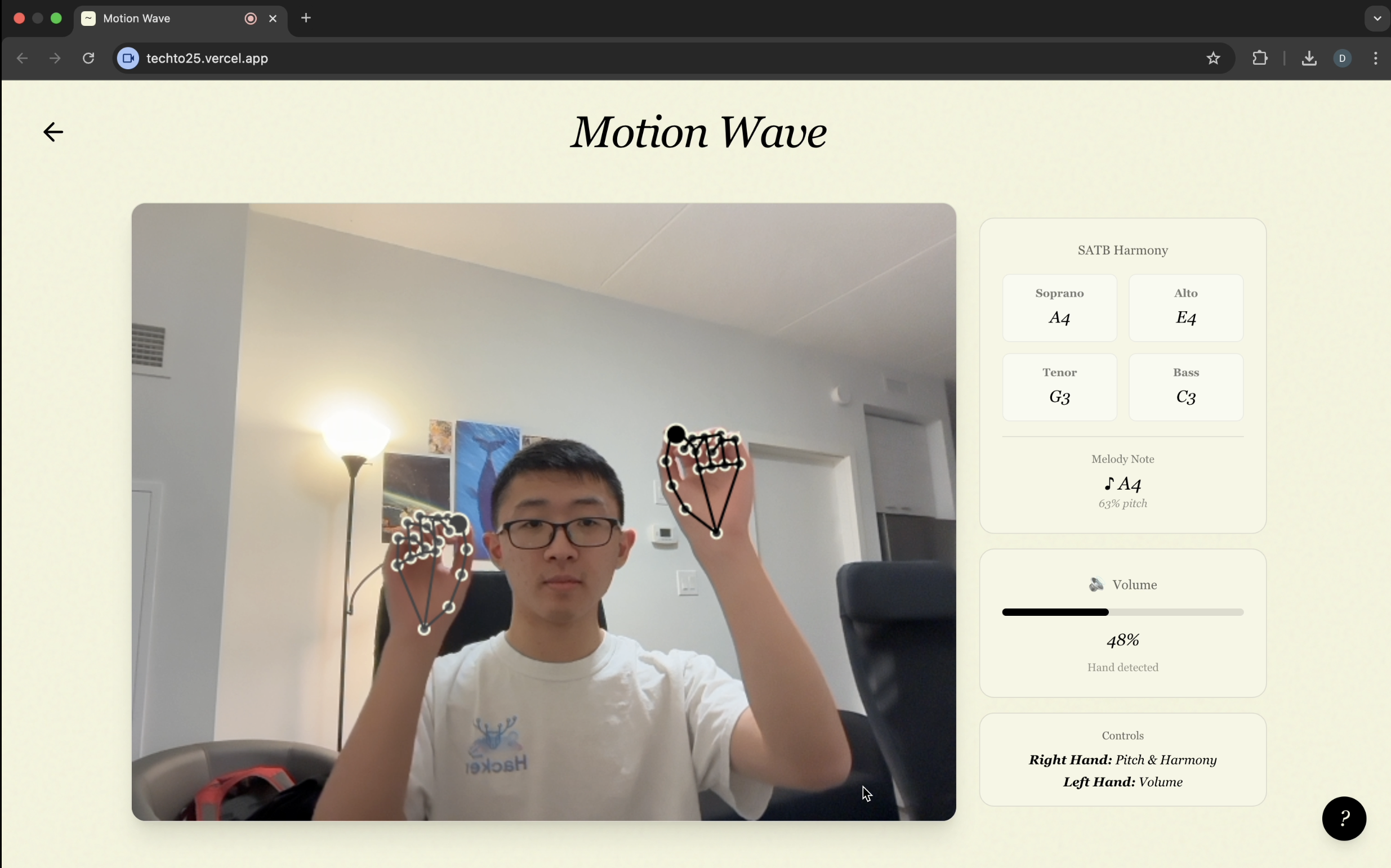

We wanted to create a musical instrument that anyone could play, regardless of musical background. MotionWave uses your webcam to track your hands: move your dominant hand up and down to control pitch, and use your other hand to adjust volume. A 4-part harmony is generated to accompany your melody in real time.

Technical Details

We built the project with Next.js, TypeScript, and Tailwind CSS. The app uses MediaPipe for hand tracking, then maps the tracked hand positions to sound: the vertical position of your dominant hand sets the pitch, and your other hand sets the volume.
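To make the hand-to-sound mapping concrete, here is a minimal sketch of how a normalized hand position could be turned into a pitch and a volume. It assumes MediaPipe-style normalized coordinates, where `y` runs from 0 at the top of the frame to 1 at the bottom. The note range (`MIN_MIDI`, `MAX_MIDI`), the function names, and the choice of the vertical axis for volume are illustrative assumptions, not MotionWave's actual code.

```typescript
// Hypothetical playable range; the real project's range is not stated.
const MIN_MIDI = 48; // C3
const MAX_MIDI = 84; // C6

/** Clamp a value into [0, 1] so off-screen hands do not produce wild values. */
function clamp01(v: number): number {
  return Math.min(1, Math.max(0, v));
}

/**
 * Map a normalized vertical position to a MIDI note number.
 * y = 0 is the top of the frame, so the value is inverted:
 * raising the hand raises the pitch.
 */
function yToMidi(y: number): number {
  const t = 1 - clamp01(y);
  return Math.round(MIN_MIDI + t * (MAX_MIDI - MIN_MIDI));
}

/** Convert a MIDI note number to a frequency in Hz (A4 = MIDI 69 = 440 Hz). */
function midiToFrequency(midi: number): number {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

/** Map the other hand's vertical position to a gain in [0, 1] (assumed axis). */
function yToGain(y: number): number {
  return 1 - clamp01(y);
}
```

In a browser, the resulting frequency and gain could then be applied to a Web Audio `OscillatorNode` and `GainNode`. Rounding to the nearest MIDI note snaps the melody to semitones, which is one way to keep the instrument approachable for non-musicians.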

A neural network generates 4-part SATB (Soprano, Alto, Tenor, Bass) harmony in real time. It runs in a Web Worker so that inference does not block the main thread, which helps keep the UI at 60 FPS. When you play a melody note, the worker receives its MIDI pitch, and the trained network predicts soprano, alto, tenor, and bass notes that harmonize with it.
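The exchange between the main thread and the worker can be sketched as a small typed message protocol. Everything here is an assumption for illustration: the message shapes, the `handleRequest` helper, and especially `placeholderPredict`, which simply stacks a fixed major triad under the melody note and stands in for the trained network.

```typescript
/** The four voices, as MIDI note numbers. */
interface SatbVoices {
  soprano: number;
  alto: number;
  tenor: number;
  bass: number;
}

/** Main thread -> worker (hypothetical message shape). */
interface HarmonizeRequest {
  type: "harmonize";
  id: number; // lets the main thread discard stale replies
  melodyMidi: number;
}

/** Worker -> main thread (hypothetical message shape). */
interface HarmonizeResponse {
  type: "harmony";
  id: number;
  voices: SatbVoices;
}

type Predictor = (melodyMidi: number) => SatbVoices;

/** Pure handler: keeps the worker's onmessage callback to a single line. */
function handleRequest(req: HarmonizeRequest, predict: Predictor): HarmonizeResponse {
  return { type: "harmony", id: req.id, voices: predict(req.melodyMidi) };
}

// Placeholder only, NOT the trained model: a root-position major triad
// with the melody note as the (doubled) root.
const placeholderPredict: Predictor = (m) => ({
  soprano: m,
  alto: m - 5, // fifth of the chord
  tenor: m - 8, // third of the chord
  bass: m - 12, // root, one octave below the melody
});

// Inside the worker file, the wiring would look roughly like:
//   self.onmessage = (e) => self.postMessage(handleRequest(e.data, predict));
// and on the main thread:
//   worker.postMessage({ type: "harmonize", id: nextId++, melodyMidi: 72 });
```

Tagging each request with an `id` is one design option for fast-moving input: if the hand has already moved on to a new note, the main thread can ignore any reply whose `id` is older than the latest request.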

The model runs as WebAssembly for fast inference (harmonizermodule.wasm), with pre-trained weights loaded from harmonizerweights.bin. This helps keep latency under 20 ms for real-time responsiveness.
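One way to check a latency target like this is to time each inference call and track how often it exceeds the budget. The sketch below is a generic measurement helper, not part of the project: `timeCall`, `overBudgetFraction`, and the injectable clock are hypothetical names, and the 20 ms budget is taken from the figure above.

```typescript
const LATENCY_BUDGET_MS = 20;

/**
 * Run fn once and report how long it took.
 * The clock is injectable so the helper can be tested deterministically;
 * by default it uses the high-resolution performance.now().
 */
function timeCall<T>(
  fn: () => T,
  now: () => number = () => performance.now(),
): { result: T; elapsedMs: number } {
  const start = now();
  const result = fn();
  return { result, elapsedMs: now() - start };
}

/** Fraction of samples that failed to stay under the budget. */
function overBudgetFraction(
  samplesMs: number[],
  budgetMs: number = LATENCY_BUDGET_MS,
): number {
  if (samplesMs.length === 0) return 0;
  const over = samplesMs.filter((ms) => ms >= budgetMs).length;
  return over / samplesMs.length;
}
```

Wrapping the model call in `timeCall` inside the worker and collecting `elapsedMs` over a session would show whether inference stays within budget on a given device, rather than relying on a single measurement.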